Calling stored procedures on SQL Server with parameters

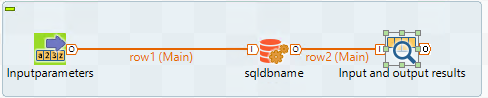

To call a stored procedure in Talend, we first need a source for its parameters, for example a tFixedFlowInput component as in the screenshot above.

tFixedFlowInput Details

In the tFixedFlowInput, create a schema that matches the parameters of the stored procedure, then select “Use Single Table”.

Create a column for each input parameter and set the value you wish to use. (The values do not have to come from a fixed flow; they can instead be sourced from, for example, an iteration loop that reads the details from the database.)

Connect the input source to a tDBSP component.

tDBSP Connection Details

Drag a tDBSP component from the Db Connections metadata for the database/server on which you want to execute the stored procedure, and configure it as follows:

SP Name = the name of the stored procedure to be executed, enclosed in double quotes

Add parameters using the + button under the Parameters section of the tDBSP component, and map each one to the matching schema column name from the input (tFixedFlowInput in this example).

Define the direction of each parameter in the Type column, e.g. IN, OUT or RECORDSET.

The example job in the screenshot simply writes the parameters and results to the log output.
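For comparison, the IN and OUT parameter types configured on the tDBSP correspond roughly to what a plain JDBC call does with a `CallableStatement`. The sketch below is not the code Talend generates; it only illustrates the idea. The procedure name `dbo.usp_Demo`, its two parameters (`@CustomerId INT`, `@Total INT OUTPUT`) and the helper names are hypothetical, chosen purely for illustration.

```java
import java.sql.CallableStatement;
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Types;

public class StoredProcSketch {

    // Builds the JDBC escape syntax for a procedure call,
    // with one "?" placeholder per parameter.
    static String buildCallSyntax(String procName, int paramCount) {
        StringBuilder sb = new StringBuilder("{call ").append(procName);
        if (paramCount > 0) {
            sb.append('(');
            for (int i = 0; i < paramCount; i++) {
                if (i > 0) {
                    sb.append(", ");
                }
                sb.append('?');
            }
            sb.append(')');
        }
        return sb.append('}').toString();
    }

    // Calls the hypothetical procedure
    //   dbo.usp_Demo(@CustomerId INT, @Total INT OUTPUT)
    // on an already-open SQL Server connection and returns the OUT value.
    static int callDemo(Connection conn, int customerId) throws SQLException {
        try (CallableStatement cs = conn.prepareCall(buildCallSyntax("dbo.usp_Demo", 2))) {
            cs.setInt(1, customerId);                  // Type = IN
            cs.registerOutParameter(2, Types.INTEGER); // Type = OUT
            cs.execute();
            return cs.getInt(2);
        }
    }

    public static void main(String[] args) {
        // Prints the call string only; no database connection is needed for this part.
        System.out.println(buildCallSyntax("dbo.usp_Demo", 2));
    }
}
```

Running `main` prints `{call dbo.usp_Demo(?, ?)}`. The `callDemo` method needs a live connection obtained through the SQL Server JDBC driver, so it is shown only to make the IN/OUT mapping concrete.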